Abstract

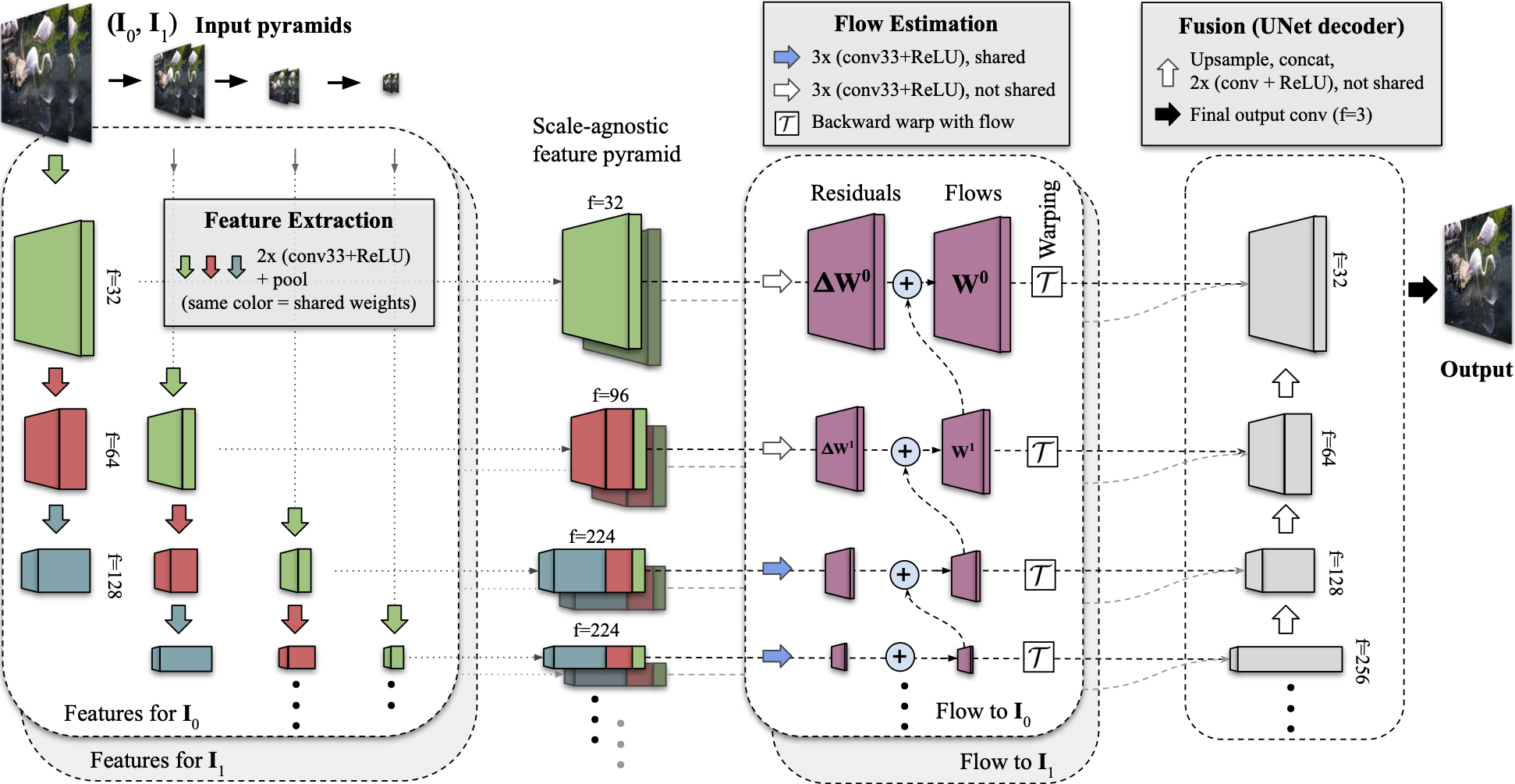

We present a frame interpolation algorithm that synthesizes an engaging slow-motion video from near-duplicate photos, which often exhibit large scene motion. Near-duplicate interpolation is an interesting new application, but large motion poses challenges to existing methods. To address this issue, we adapt a feature extractor that shares weights across scales and present a "scale-agnostic" motion estimator. It relies on the intuition that large motion at finer scales should be similar to small motion at coarser scales, which boosts the number of available pixels for large motion supervision. To inpaint wide disocclusions caused by large motion and synthesize crisp frames, we propose to optimize our network with the Gram matrix loss, which measures the correlation difference between features. To simplify the training process, we further propose a unified single-network approach that removes the reliance on additional optical-flow or depth networks and is trainable from frame triplets alone. Our approach outperforms state-of-the-art methods on the Xiph large motion benchmark while performing favorably on Vimeo-90K, Middlebury, and UCF101. Code and pre-trained models are available at github.com/google-research/frame-interpolation.
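To make the Gram matrix loss mentioned in the abstract concrete, here is a minimal NumPy sketch, not the released implementation. It assumes the feature maps have already been extracted by some network and are passed in as `(H, W, C)` arrays. The function names, the normalization by `H * W`, and the mean-squared reduction are illustrative assumptions.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel correlation matrix of one (H, W, C) feature map.

    Assumption: the result is normalized by the number of pixels, H * W.
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)  # one row per pixel
    return flat.T @ flat / (h * w)     # shape (C, C)


def gram_loss(pred_feats, target_feats) -> float:
    """Sum over feature levels of the mean squared Gram matrix difference.

    pred_feats / target_feats: sequences of (H, W, C) arrays, one per level
    (hypothetical inputs; the feature extractor itself is not shown here).
    """
    total = 0.0
    for pred, target in zip(pred_feats, target_feats):
        diff = gram_matrix(pred) - gram_matrix(target)
        total += float(np.mean(diff ** 2))
    return total
```

Because the Gram matrix sums over all pixel positions, it compares feature correlations rather than per-pixel values, so two feature maps with the same pixels in a different spatial order yield a loss of approximately zero under this sketch.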

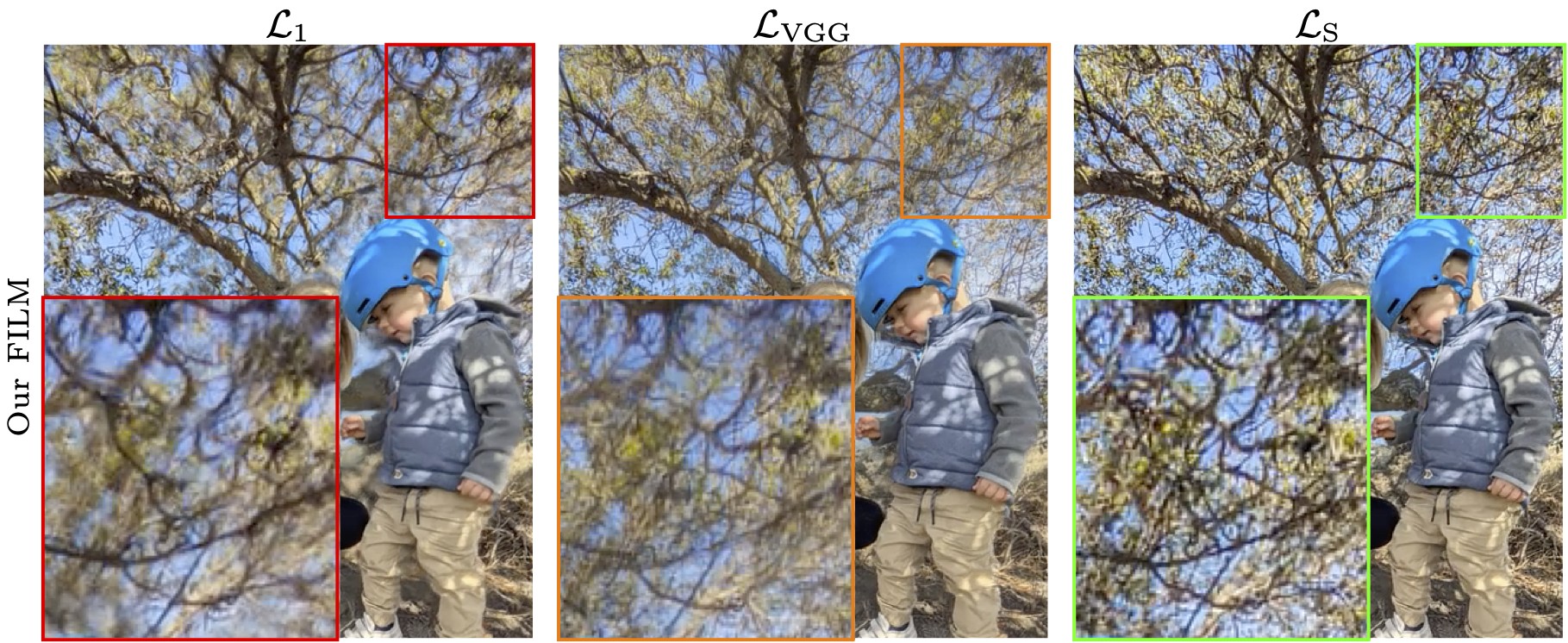

Loss Functions Ablation

FILM Architecture Overview
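The weight-sharing idea from the abstract can be illustrated with a small NumPy sketch. This is a simplified stand-in under stated assumptions, not the FILM architecture: the "extractor" is a single per-pixel linear map followed by ReLU, the pyramid uses 2x average pooling, and all function names are hypothetical. It only shows that the same weights are applied at every scale, and that a displacement shrinks by a factor of 2 per pyramid level.

```python
import numpy as np


def downsample2x(img: np.ndarray) -> np.ndarray:
    """Halve the resolution of an (H, W, C) image by 2x2 average pooling."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2  # crop to even size
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2, -1).mean(axis=(1, 3))


def build_pyramid(img: np.ndarray, levels: int) -> list:
    """Image pyramid, finest level first."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


def shared_extractor(img: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Toy extractor: per-pixel linear map (C -> F) followed by ReLU."""
    return np.maximum(img @ weights, 0.0)


def extract_pyramid_features(img, weights, levels=3):
    """Apply the SAME weights at every pyramid level (the shared-weight idea)."""
    return [shared_extractor(level, weights) for level in build_pyramid(img, levels)]


def motion_at_level(motion_px: float, level: int) -> float:
    """Displacement in pixels after `level` rounds of 2x downsampling."""
    return motion_px / (2 ** level)
```

For example, a 32-pixel motion at the finest level corresponds to an 8-pixel motion two levels coarser, which is the sense in which large motion at finer scales resembles small motion at coarser scales.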

Video

BibTeX

@inproceedings{reda2022film,
  title={FILM: Frame Interpolation for Large Motion},
  author={Fitsum Reda and Janne Kontkanen and Eric Tabellion and Deqing Sun and Caroline Pantofaru and Brian Curless},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2022}
}